The Mil & Aero Blog

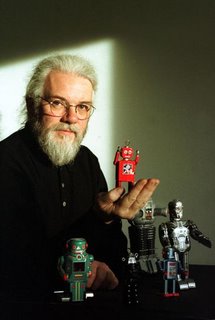

Posted by John Keller

Last month I posted a blog item headlined "Autonomous arms race: gentlemen, start your robots," in which I took Noel Sharkey, a university professor in Sheffield, England, to task for the views on military robotic technology he presented in a keynote address to the Royal United Services Institute. The gist was that I believe robots are, and will be, valuable tools for military planners. Professor Sharkey, on the other hand, says not so fast: we need to take a closer look at the human issues of deploying military robotic technology before we go much farther down this road. This morning I received a long and very thoughtful response from Professor Sharkey to my blog item. We still disagree on a variety of issues, but that's beside the point of today's blog. Today I'd simply like to share Professor Sharkey's thoughts with you and let his points speak for themselves.

You make some valid points here, John, but I think that you have slanted my line of reasoning a little in the wrong direction. I will make my main case again here.

I do not have a problem with "a man in the loop" robots. It is dumb autonomous robots that I am concerned about. I am not politically active and I am not anti military. Laying my cards face up on the table, my position is simply that it is the duty and moral responsibility of all citizens to protect innocent people everywhere, regardless of creed, race, or nationality. All innocents have a right to protection.

There are many breaches of this right that fall outside of my remit. I concern myself solely with new weapons that are being constructed using research from my own field of enquiry.

My concerns arise from my knowledge of the limitations of artificial intelligence and robotics. I am clearly not calling for a ban on all robots as I have worked in the field for nearly 30 years.

I don't blame you for not believing me about the use of fully autonomous battle robots. I found it hard to believe myself until I read the plans and talked to military officers about them. Just do a Google search for military roadmaps to get up to speed on this issue. Read the Air Force, Army, and Navy roadmaps published in 2005, or the December 2007 Unmanned Systems Roadmap 2007-2032 (a large PDF), and you might begin to see my concerns.

In a book entitled Autonomous Vehicles in Support of Naval Operations published by The National Academies Press in 2005, a Naval committee wrote that, "The Navy and Marine Corps should aggressively exploit the considerable warfighting benefits offered by autonomous vehicles (AVs) by acquiring operational experience with current systems and using lessons learned from that experience to develop future AV technologies, operational requirements, and systems concepts."

The signs are there that such plans are falling into place. On the ground, the U.S. Defense Advanced Research Projects Agency (DARPA) in Arlington, Va., ran a successful Grand Challenge for four years, an autonomous vehicle race across the Mojave Desert. In 2007 it changed to an urban challenge in which autonomous vehicles navigated around a mock city environment. You don't have to be too clever to see where this is going. In February this year DARPA showed off its "Unmanned Ground Combat Vehicle and Perceptor Integration System," otherwise known as the Crusher. This is a 6.5-ton robot truck, nine feet wide, with no room for passengers or a steering wheel, which travels at 26 miles per hour. Stephen Welby, director of the DARPA Tactical Technology Office, said, "This vehicle can go into places where, if you were following in a Humvee, you'd come out with spinal injuries." The Carnegie Mellon University Robotics Institute is reported to have received $35 million over four years to deliver this project. Admittedly, it is just a demonstration project at present.

Last month BAE Systems tested software for a squadron of planes that could select their own targets and decide among themselves which target each should acquire. Again, this is a demonstration system, but the signs are there.

There are a number of good military reasons for such a move. Teleoperated systems are expensive to manufacture and require many support personnel to run them. One of the main goals of the Future Combat Systems project is to use robots as a force multiplier so that one soldier on the battlefield can be a nexus for initiating a large-scale robot attack from the ground and from the air.

Clearly, one soldier cannot operate several robots alone. Autonomous systems have the advantage of being able to make decisions in nanoseconds while humans need a minimum of hundreds of milliseconds.

FCS spending is going to be in the order of $230 billion, with spending on unmanned systems expected to exceed $24 billion ($4 billion up to 2010).

The downside is that autonomous robots that are allowed to make decisions about whom to kill fall foul of the fundamental ethical precepts of a just war under jus in bello, as enshrined in the Geneva and Hague conventions and the various protocols set up to protect innocent civilians, wounded soldiers, the sick, the mentally ill, and captives.

There is no way for a robot or artificial intelligence system to determine the difference between a combatant and an innocent civilian. There are no visual or sensing systems up to that challenge. The Laws of War provide no clear definition of a civilian that can be used by a machine. The 1949 Geneva Convention requires the use of common sense, while the 1977 Protocol I (Article 50) essentially defines a civilian in the negative sense, as someone who is not a combatant. Even if there were a clear definition, and even if sensing were possible, human judgment would be required to identify the infinite number of circumstances where lethal force is inappropriate. Just think of a child forced to carry an empty rifle.

The Laws of War also require that lethal force be proportionate to military advantage. Again, there is no sensing capability that would allow a robot to make such a determination, nor is there any known metric to objectively measure needless, superfluous, or disproportionate suffering. This requires human judgment. Yes, humans do make errors and can behave unethically, but they can be held accountable. Who is to be held responsible for the lethal mishaps of a robot? Certainly not the machine itself. There is a long causal chain associated with robots: the manufacturer, the programmer, the designer, the Department of Defense, the generals or admirals in charge of the operation, and the operator.

This is where your analogy with gun control breaks down. If someone kills an innocent person with a gun, the shooter is responsible for the crime. The gun does not decide whom to kill. If criminals decided to put guns on robots that wandered around shooting innocent people, do you think that good citizens should also put guns on robots to go around shooting innocent people? It does not make sense.

The military forces in the civilized world do not want to kill civilians. There are strong legal procedures in the United States, and JAG [the military's Judge Advocate General] has to validate all new weapons. My worry is that there will be a gradual sleepwalk into the use of autonomous weapons like the ones that I mentioned. I want us to step back and make the policies rather than let the policies make themselves.

I take your point (and defer to your greater expertise) about the slowness of politics and the UN. But we must try. There are no current international guidelines, or even discussions, about the uses of autonomous robots in warfare. These are needed urgently. The present machines could be little more than heavily armed mobile mines, and we have already seen the damage that land mines have caused to children at play. Imagine the potential devastation of heavily armed robots on a deep mission out of radio communication. The only humane course of action is to severely restrict or ban the deployment of these new weapons until it can be demonstrated that they can pass an "innocents discrimination test" in real-life circumstances.

I am pleased that you have allowed this opportunity to debate the issues and put my viewpoint forward.

best wishes,

Noel


Welcome to the lighter side of Military & Aerospace Electronics. This is where our staff recount tales of the strange, the weird, and the otherwise offbeat. We could put news here, but we have the rest of our Website for that. Enjoy our scribblings, and feel free to add your own opinions. You might also get to know us in the process. Proceed at your own risk.

John Keller

John Keller is editor-in-chief of Military & Aerospace Electronics magazine, which provides extensive coverage and analysis of enabling electronic and optoelectronic technologies in military, space, and commercial aviation applications. A member of the Military & Aerospace Electronics staff since the magazine's founding in 1989, Mr. Keller took over as chief editor in 1995.

Courtney E. Howard

Courtney E. Howard is senior editor of Military & Aerospace Electronics magazine. She is responsible for writing news stories and feature articles for the print publication, as well as composing daily news for the magazine's Website and assembling the weekly electronic newsletter. Her features have appeared in such high-tech trade publications as Military & Aerospace Electronics, Computer Graphics World, Electronic Publishing, Small Times, and The Audio Amateur.

John McHale

John McHale is executive editor of Military & Aerospace Electronics magazine, where he has been covering the defense industry for more than a dozen years. During that time he also led PennWell's launches of magazines and shows on homeland security and a defense publication and website in Europe. Mr. McHale has served as chairman of the Military & Aerospace Electronics Forum and its Advisory Council since 2004. He lives in Boston with his golf clubs.
